Invocal Reality — embedding story into spaces

The objects around us are heavily informed with stories about our spaces and relationships. Can these objects transform into interfaces to tell these stories?

Media Interaction Design

Collaborators: Farhadiba Alti

Role: Designer, Technologist

Tools: openFrameworks, Arduino

Duration: 10 weeks

Goal

Build a novel, exhibitable interactive project under the theme of “merging the physical and digital worlds”, with attention to methods and materials.

Background

In our dynamic world, we are constantly surrounded by static objects that silently observe us. What if these objects could share the hidden insights they hold?

By nature, humans have long been drawn to the “magic” of the objects around us, and to the idea that those objects could tell us stories about the places they occupy when we aren’t there.

How could we harness the multiple meanings of objects to transform them into tangible, natural user interfaces? If technology were embedded into these everyday objects, what kind of digital information would be assistive and meaningful?

References: Durrell Bishop’s Marble Answering Machine, Hiroshi Ishii’s story about an abacus, Invoked Computing

Approach

Our approach was to create a reality in which messages could easily be left on objects, accompanied by carefully designed visual feedback.

Conversations often begin with memories triggered by associations. Objects can trigger associations, and those associations can start conversations. These associations can be either semantic or sentimental. For example, I could see a banana and think about asking my roommate whether they want to bake banana bread this weekend. Or I could see a banana and remember the last time we went grocery shopping, when we passed a new bookstore we wanted to check out sometime. We therefore wanted to create an environment where these messages could be left on the objects themselves, enabling a variety of scenarios, from utilitarian to highly personal.

In “merging the digital and physical”, there is abundant opportunity to use the digital to extend the memory or lifespan of the physical. In this project, the sharing of these spontaneous associations is no longer bound by time or circumstance; it transcends both.

In our approach, we valued craftsmanship, resourcefulness, and robustness.

Interaction

Within the currently implemented scope of the project, a participant who walks up to Invocal Reality has a few options:

If there is an object present and no message, they can leave a message.

If there is an object present and there is a message, they can listen to the message.

If there is no object present, they can leave an object. They can also leave a message on the object.

Once the message has played, the participant can put the object back (and leave another message, etc.) or place a different object.

The environment responds as follows:

A message can be left on any object within the environment by picking up the object and speaking to it.

If there is a message on an object, an indicator appears around the object, with visual details corresponding to the nature of the message. Once the message has been completely replayed, the indicator disappears.
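The interaction rules above can be summarized as a small state machine. The following is a minimal, hypothetical C++ model of a single tabletop slot, not the project's code: the state and event names are illustrative, the model cannot tell one object from another, and it assumes that lifting an object that carries a message starts playback, which the description above does not specify.

```cpp
// Hypothetical model of one tabletop slot; names are illustrative only.
enum class State { Empty, Resting, Lifted, Playing };
enum class Event { ObjectPlaced, ObjectLifted, MessageRecorded, PlaybackFinished };

class ObjectSlot {
public:
    State state() const { return state_; }
    bool hasMessage() const { return hasMessage_; }

    // The indicator is shown only while a message waits on an object at rest.
    bool indicatorVisible() const { return state_ == State::Resting && hasMessage_; }

    void handle(Event e) {
        switch (state_) {
        case State::Empty:
            if (e == Event::ObjectPlaced) state_ = State::Resting;
            break;
        case State::Resting:
            // Assumption: lifting an object that holds a message starts playback;
            // lifting an object without one lets the participant speak to it.
            if (e == Event::ObjectLifted) state_ = hasMessage_ ? State::Playing : State::Lifted;
            break;
        case State::Lifted:
            if (e == Event::MessageRecorded) hasMessage_ = true;
            else if (e == Event::ObjectPlaced) state_ = State::Resting;
            break;
        case State::Playing:
            // A fully replayed message is cleared, so the indicator will not return
            // unless a new message is recorded before the object is put back.
            if (e == Event::PlaybackFinished) {
                hasMessage_ = false;
                state_ = State::Lifted;
            }
            break;
        }
    }

private:
    State state_ = State::Empty;
    bool hasMessage_ = false;
};
```

Events that do not apply in the current state are simply ignored, which keeps stray sensor readings from corrupting the flow.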

Implementation

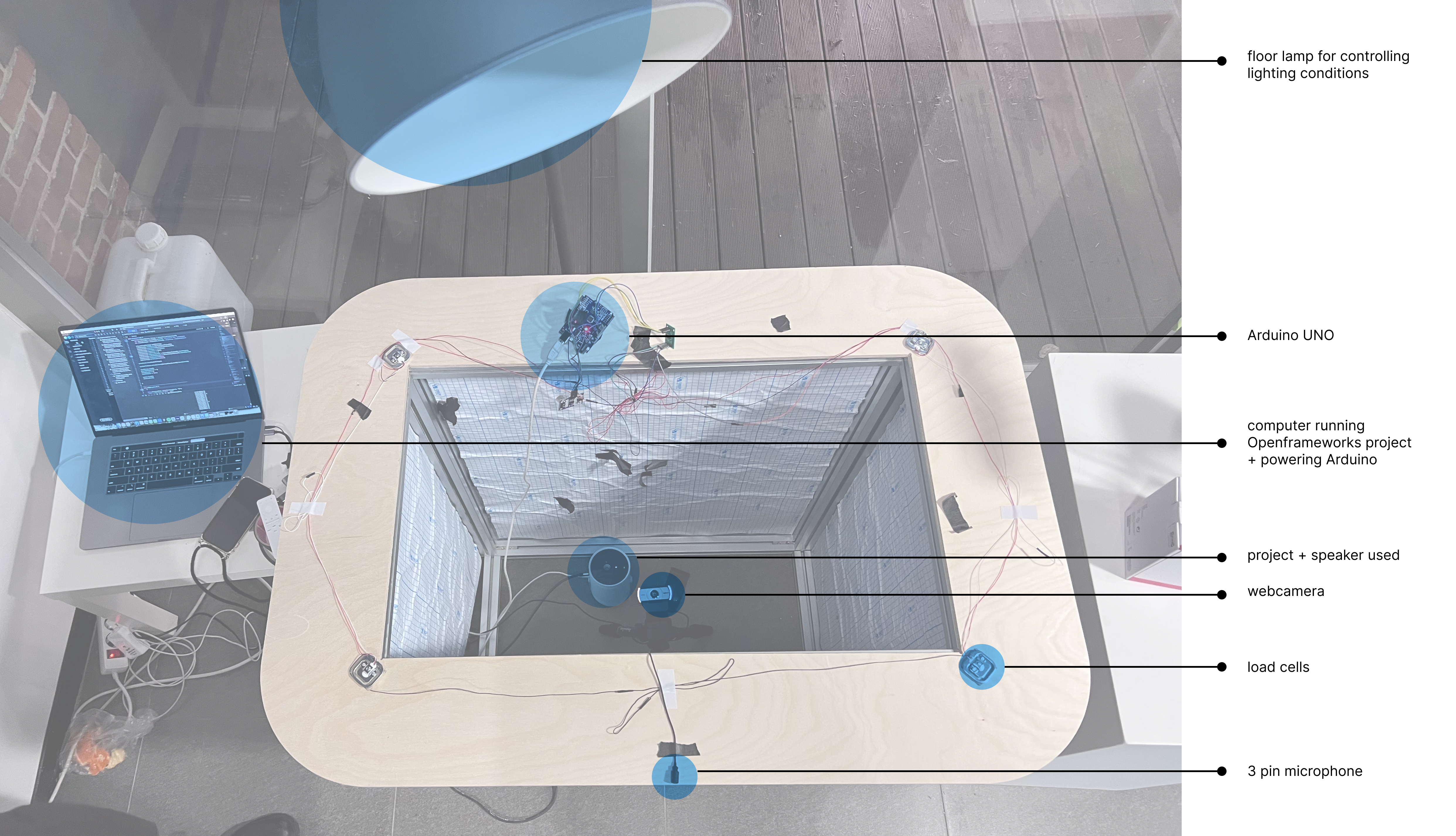

In this prototype, we scaled the scope of our reality down to an “interactive tabletop” that could detect one arbitrary object at a time.
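To make the one-object-at-a-time constraint concrete, here is a simplified stand-in for the OpenCV contour step, not the project's implementation. It swaps in plain background differencing: each 8-bit grayscale frame is compared with a background frame captured while the table is empty, and the bounding box and centroid of all changed pixels are reported. The function name and the `diffThreshold` and `minArea` parameters are hypothetical; because every changed pixel is attributed to a single object, the sketch only holds while at most one object is on the table.

```cpp
#include <algorithm>
#include <cstdint>
#include <cstdlib>
#include <optional>
#include <vector>

// Result for the single object assumed to be on the table.
struct Detection {
    int minX, minY, maxX, maxY;  // inclusive bounding box of changed pixels
    float cx, cy;                // centroid of changed pixels
    int area;                    // number of changed pixels
};

// Hypothetical stand-in for contour detection: background differencing on
// row-major grayscale frames of width * height bytes.
std::optional<Detection> detectSingleObject(const std::vector<uint8_t>& frame,
                                            const std::vector<uint8_t>& background,
                                            int width, int height,
                                            int diffThreshold, int minArea) {
    Detection d{width, height, -1, -1, 0.0f, 0.0f, 0};
    long sumX = 0, sumY = 0;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const int i = y * width + x;
            const int diff = std::abs(int(frame[i]) - int(background[i]));
            if (diff <= diffThreshold) continue;  // pixel still matches the empty table
            ++d.area;
            sumX += x;
            sumY += y;
            d.minX = std::min(d.minX, x);
            d.minY = std::min(d.minY, y);
            d.maxX = std::max(d.maxX, x);
            d.maxY = std::max(d.maxY, y);
        }
    }
    // Too few changed pixels is treated as noise rather than an object.
    if (d.area == 0 || d.area < minArea) return std::nullopt;
    d.cx = float(sumX) / float(d.area);
    d.cy = float(sumY) / float(d.area);
    return d;
}
```

In openFrameworks, the OpenCV route would more likely go through a contour finder such as the one in the ofxOpenCv addon; the differencing version only illustrates the same "one blob, one object" assumption without a library dependency.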

The main project was written in openFrameworks, using the OpenCV library for object/contour detection and additional libraries for sound recording and playback. A particle class was written as a visual indicator showing 1) whether a message has been left on an object and 2) the length of that message. Message length was mapped to the hue of the particles, so that, for example, a curt message appears red and a longer message appears dark blue. Other details, such as sentiment analysis or the sender and intended recipient of the message, could be encoded in the indicator using additional speech recognition libraries.
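The length-to-hue mapping can be sketched as a clamped linear interpolation. This sketch assumes hue on the 0 to 255 scale used by openFrameworks' `ofColor`, where 0 is red and roughly 170 is blue (240 of 360 degrees). The 2-second and 30-second bounds are hypothetical, and `mapClamped` is a standalone equivalent of `ofMap` with clamping enabled, written out so the example compiles without openFrameworks.

```cpp
#include <algorithm>

// Standalone equivalent of ofMap(v, inMin, inMax, outMin, outMax, true).
float mapClamped(float v, float inMin, float inMax, float outMin, float outMax) {
    float t = (v - inMin) / (inMax - inMin);
    t = std::clamp(t, 0.0f, 1.0f);
    return outMin + t * (outMax - outMin);
}

// Hue on a 0-255 scale: 0 is red, about 170 is blue.
constexpr float kHueRed = 0.0f;
constexpr float kHueBlue = 170.0f;

// Hypothetical bounds: messages of 2 s or less read as red, 30 s or more as blue.
float hueForMessageLength(float seconds, float shortSec = 2.0f, float longSec = 30.0f) {
    return mapClamped(seconds, shortSec, longSec, kHueRed, kHueBlue);
}
```

Inside an openFrameworks draw loop, the result could be passed to `ofColor::fromHsb(hue, 255, 255)` when coloring each particle. Clamping keeps unusually short or long recordings at the ends of the palette instead of wrapping around the hue circle.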

Serial input arrives from sensors wired to an Arduino Uno. Load cells signal the object's state (picked up or put down); in future iterations, they could be adapted to provide persistence by tracking and remembering multiple objects at once. Ultrasonic distance sensors are also connected to detect when a person approaches the objects, which would subtly change the velocity and brightness/saturation of the particles to beckon the person toward the message.
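On the openFrameworks side, the serial stream has to be split into readings and the load-cell weight turned into discrete pick-up and put-down events. The following is a sketch under stated assumptions: it assumes a hypothetical line format, `<weight_grams>,<distance_cm>`, printed once per reading by the Arduino, and the thresholds, distance range, and all names are illustrative. A two-threshold (hysteresis) detector keeps the weight from flickering around a single cutoff, and a clamped proximity factor could scale particle velocity and brightness as someone approaches.

```cpp
#include <algorithm>
#include <optional>
#include <sstream>
#include <string>

// Hypothetical serial protocol: one "<weight_grams>,<distance_cm>" line per reading.
struct SensorReading {
    float weightGrams;
    float distanceCm;
};

std::optional<SensorReading> parseLine(const std::string& line) {
    std::istringstream in(line);
    SensorReading r{};
    char comma = 0;
    if (!(in >> r.weightGrams >> comma >> r.distanceCm) || comma != ',') return std::nullopt;
    return r;  // a trailing '\r' from the Arduino is simply left unread
}

// Turns load-cell weight into present/absent with separate on/off thresholds.
class PresenceDetector {
public:
    PresenceDetector(float onGrams, float offGrams) : on_(onGrams), off_(offGrams) {}

    // Returns true only when this reading changes the present/absent state.
    bool update(float grams) {
        const bool previous = present_;
        if (!present_ && grams > on_) present_ = true;
        else if (present_ && grams < off_) present_ = false;
        return present_ != previous;
    }
    bool present() const { return present_; }

private:
    float on_, off_;
    bool present_ = false;
};

// 1.0 when a person is at or inside nearCm, 0.0 at or beyond farCm (hypothetical range).
float proximityFactor(float distanceCm, float nearCm = 30.0f, float farCm = 150.0f) {
    const float t = (farCm - distanceCm) / (farCm - nearCm);
    return std::clamp(t, 0.0f, 1.0f);
}
```

Each complete line read through `ofSerial` could be handed to `parseLine`, with any state change reported by `PresenceDetector::update` forwarded to the interaction logic as a placed or lifted event.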

My individual contributions include form design and fabrication, material and technology choices, physical computing, serial communication, contour detection, and programming the particle system.

Gallery